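SeaweedFS is already running in production via docker compose. The only port it exposes is the S3 gateway on 127.0.0.1:8333, and Caddy sits in front of it as an HTTPS reverse proxy, so the setup is reasonably secure.

Then the more advanced requirements arrived: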

1. S3 pre-signed URLs

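The application used to run on AWS, so it follows the S3 best practice of handing out pre-signed URLs for uploads and downloads. SeaweedFS supports these fully, so switching over needed essentially no code changes.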
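For reference, a SigV4 pre-signed URL (what boto3 produces with `signature_version='s3v4'`) carries everything the server needs in its query string: `X-Amz-Credential`, `X-Amz-Date` (the signing time), `X-Amz-Expires` (the lifetime in seconds, i.e. `ExpiresIn`) and `X-Amz-Signature`. Below is a minimal debugging sketch that reads back when such a URL expires. `presigned_url_expiry` and `is_expired` are hypothetical helpers, and they do not verify the signature; only the server can do that.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional
from urllib.parse import parse_qs, urlsplit


def presigned_url_expiry(url: str) -> datetime:
    """Return the UTC instant at which a SigV4 pre-signed URL stops being valid."""
    query = parse_qs(urlsplit(url).query)
    # X-Amz-Date is the signing time in basic ISO 8601 form, e.g. 20240101T000000Z
    signed_at = datetime.strptime(query["X-Amz-Date"][0], "%Y%m%dT%H%M%SZ")
    signed_at = signed_at.replace(tzinfo=timezone.utc)
    # X-Amz-Expires is the lifetime in seconds (ExpiresIn in boto3)
    return signed_at + timedelta(seconds=int(query["X-Amz-Expires"][0]))


def is_expired(url: str, now: Optional[datetime] = None) -> bool:
    """Check a URL against the clock; this sketch treats the exact boundary as expired."""
    now = now or datetime.now(timezone.utc)
    return now >= presigned_url_expiry(url)
```

For a URL generated with `ExpiresIn=3600`, the expiry should come out as the signing time plus one hour.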

Here is a script to verify it:

```python
import boto3
from botocore.client import Config

# Configure the S3 client to point to your SeaweedFS S3 gateway
s3_client = boto3.client(
    's3',
    endpoint_url='https://s3.rendoumi.com',  # Replace with your SeaweedFS S3 gateway address
    aws_access_key_id='aaaaaaaa',
    aws_secret_access_key='bbbbbbb',
    config=Config(signature_version='s3v4')
)

bucket_name = 'myfiles'
object_key = 'your-object-key'
expiration_seconds = 3600  # URL valid for 1 hour

# Generate a pre-signed URL for uploading (PUT)
try:
    upload_url = s3_client.generate_presigned_url(
        'put_object',
        Params={'Bucket': bucket_name, 'Key': object_key, 'ContentType': 'application/octet-stream'},
        ExpiresIn=expiration_seconds
    )
    print(f"Pre-signed URL for upload: {upload_url}")
except Exception as e:
    print(f"Error generating upload URL: {e}")

# Generate a pre-signed URL for downloading (GET)
try:
    download_url = s3_client.generate_presigned_url(
        'get_object',
        Params={'Bucket': bucket_name, 'Key': object_key},
        ExpiresIn=expiration_seconds
    )
    print(f"Pre-signed URL for download: {download_url}")
except Exception as e:
    print(f"Error generating download URL: {e}")
```

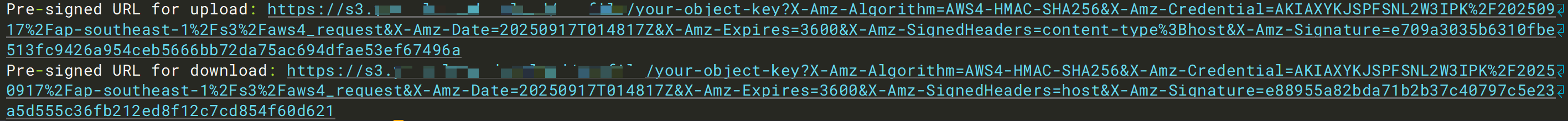

The script prints a temporary upload URL and a temporary download URL, so pre-signed URLs work out of the box.
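On the client side, using these URLs needs no S3 SDK at all: a plain HTTP PUT or GET is enough. Here is a minimal stdlib sketch; the helper names are hypothetical. One assumption worth stating: because the upload URL above was generated with `ContentType='application/octet-stream'`, the PUT should send that same `Content-Type`, otherwise the signature check is likely to fail.

```python
import urllib.request


def upload_with_presigned_url(url: str, data: bytes,
                              content_type: str = "application/octet-stream") -> int:
    """PUT raw bytes to a pre-signed upload URL and return the HTTP status code."""
    req = urllib.request.Request(
        url,
        data=data,
        method="PUT",
        # Should match the ContentType used when the URL was signed
        headers={"Content-Type": content_type},
    )
    with urllib.request.urlopen(req, timeout=30) as resp:
        return resp.status


def download_with_presigned_url(url: str) -> bytes:
    """GET an object through a pre-signed download URL and return its bytes."""
    with urllib.request.urlopen(url, timeout=30) as resp:
        return resp.read()
```

For example, `upload_with_presigned_url(upload_url, b"hello")` followed by `download_with_presigned_url(download_url)`, using the URLs printed by the script above.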

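2. Domain proxying for S3 buckets

On AWS, every S3 bucket gets a default domain name (bucket.ap-southeast-1…), and you can put CloudFront in front of it to serve the bucket as a static website through a CDN.

For the migration there are two options. If the CDN provider supports an S3 bucket as an origin, it's easy: just configure that directly.

If it doesn't, the only option is to expose the SeaweedFS filer and let the CDN pull from it over HTTP (origin fetch).

That is simple too: edit docker-compose.yaml to also publish the filer's port 8888, again bound to 127.0.0.1 only: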

```yaml
services:
  seaweedfs-s3:
    image: chrislusf/seaweedfs
    container_name: seaweedfs-s3
    volumes:
      - ./data:/data
      - ./config/config.json:/seaweedfs/config.json
    ports:
      - "127.0.0.1:8333:8333"
      - "127.0.0.1:8888:8888"
    entrypoint: /bin/sh -c
    command: |
      "echo 'Starting SeaweedFS S3 server' && \
      weed server -dir=/data -volume.max=100 -s3 -s3.config /seaweedfs/config.json"
    restart: unless-stopped
```

Then put Caddy in front of it as a reverse proxy. The Caddyfile:

```
s3.rendoumi.com {
	reverse_proxy 127.0.0.1:8333
}

mybucket.s3.rendoumi {
	rewrite * /buckets/mybucket{uri}
	reverse_proxy http://127.0.0.1:8888
}
```

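One thing to watch out for: the filer on port 8888 exposes every bucket, each under /buckets. Since we only want to expose mybucket, the site block for that domain rewrites each request URI to /buckets/mybucket{uri} before proxying it to the filer.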
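The rewrite is just a path prefix. Here is a tiny Python sketch that mimics the string mapping performed by the `rewrite * /buckets/mybucket{uri}` line. It models only that mapping, not Caddy itself, and `filer_path_for` is a hypothetical name. In Caddy, `{uri}` is the request path plus the query string.

```python
from urllib.parse import urlsplit


def filer_path_for(public_url: str, bucket: str = "mybucket") -> str:
    """Map a request on the bucket domain to the path the filer receives."""
    parts = urlsplit(public_url)
    # Rebuild Caddy's {uri}: path plus "?query" when a query string is present
    uri = parts.path or "/"
    if parts.query:
        uri += "?" + parts.query
    return f"/buckets/{bucket}{uri}"
```

So a CDN origin fetch for `/img/logo.png` on the bucket domain reaches the filer as `/buckets/mybucket/img/logo.png`.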

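3. S3 CORS

It turned out the application also relies on S3 CORS, which looked like trouble. Luckily, SeaweedFS supports that too!

Docs: https://github.com/seaweedfs/seaweedfs/wiki/S3-CORS

We only restrict CORS on the mybucket bucket. First, prepare a cors.xml file: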

```xml
<CORSConfiguration>
  <CORSRule>
    <AllowedOrigin>https://www.rendoumi.com</AllowedOrigin>
    <AllowedOrigin>https://mybucket.rendoumi.com</AllowedOrigin>
    <AllowedOrigin>https://*.rendoumi.com</AllowedOrigin>
    <AllowedOrigin>https://mybucket.fcdn.net</AllowedOrigin>
    <AllowedMethod>GET</AllowedMethod>
    <AllowedMethod>POST</AllowedMethod>
    <AllowedMethod>PUT</AllowedMethod>
    <AllowedMethod>HEAD</AllowedMethod>
    <AllowedMethod>DELETE</AllowedMethod>
    <AllowedHeader>*</AllowedHeader>
    <ExposeHeader>ETag</ExposeHeader>
    <ExposeHeader>Access-Control-Allow-Origin</ExposeHeader>
    <MaxAgeSeconds>3600</MaxAgeSeconds>
  </CORSRule>
</CORSConfiguration>
```
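In the AWS S3 documentation, each `AllowedOrigin` may contain at most one `*` wildcard, and a rule admits a request when its Origin matches one of the rule's origins and its method is one of the rule's `AllowedMethod` values. Below is a sketch of that matching logic. It assumes SeaweedFS follows the same semantics (not verified here), and the helper names are hypothetical. It also shows that `https://*.rendoumi.com` already covers the two explicit `rendoumi.com` entries, so those lines are redundant but harmless.

```python
from typing import Iterable


def origin_matches(origin: str, pattern: str) -> bool:
    """Match an Origin header against an AllowedOrigin containing at most one '*'."""
    if "*" not in pattern:
        return origin == pattern
    prefix, _, suffix = pattern.partition("*")
    # Prefix and suffix must both fit in the origin without overlapping
    return (
        len(origin) >= len(prefix) + len(suffix)
        and origin.startswith(prefix)
        and origin.endswith(suffix)
    )


def rule_allows(origin: str, method: str,
                allowed_origins: Iterable[str], allowed_methods: Iterable[str]) -> bool:
    """True if a single CORSRule admits this Origin/method pair."""
    return method in set(allowed_methods) and any(
        origin_matches(origin, p) for p in allowed_origins
    )
```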

This part is genuinely annoying: MinIO's mc only accepts this XML format, not JSON (or at least I couldn't find a JSON option).

Then push it with mc:

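```shell
# mys3: an mc alias pointing at the SeaweedFS S3 endpoint (set up beforehand with `mc alias set`)
mc cors set mys3/mybucket /data/weedfs/cors.xml
mc cors get mys3/mybucket
```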
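If you'd rather not depend on mc's XML-only input, boto3's `put_bucket_cors` takes the configuration as a Python dict (the same shape as the JSON form of the API). The sketch below converts the cors.xml above into that dict and pushes it. It assumes SeaweedFS accepts `PutBucketCors` from boto3 just as it does from `mc cors set`, and it reuses the placeholder endpoint and keys from the pre-signed URL script. `cors_xml_to_dict` and `push_cors` are hypothetical helpers.

```python
import xml.etree.ElementTree as ET

# XML element name inside <CORSRule> -> list-valued key used by boto3
LIST_FIELDS = {
    "AllowedOrigin": "AllowedOrigins",
    "AllowedMethod": "AllowedMethods",
    "AllowedHeader": "AllowedHeaders",
    "ExposeHeader": "ExposeHeaders",
}


def _local(tag: str) -> str:
    """Drop an XML namespace prefix such as {http://s3.amazonaws.com/doc/2006-03-01/}."""
    return tag.rsplit("}", 1)[-1]


def cors_xml_to_dict(xml_text: str) -> dict:
    """Convert a <CORSConfiguration> document into boto3's CORSConfiguration dict."""
    rules = []
    for rule_el in ET.fromstring(xml_text):
        if _local(rule_el.tag) != "CORSRule":
            continue
        rule = {}
        for child in rule_el:
            name = _local(child.tag)
            value = (child.text or "").strip()
            if name in LIST_FIELDS:
                rule.setdefault(LIST_FIELDS[name], []).append(value)
            elif name == "MaxAgeSeconds":
                rule["MaxAgeSeconds"] = int(value)
            elif name == "ID":
                rule["ID"] = value
        rules.append(rule)
    return {"CORSRules": rules}


def push_cors(xml_path: str, bucket: str = "mybucket") -> list:
    """Apply the rules with boto3 and return the rules the server reports back."""
    import boto3  # imported lazily so cors_xml_to_dict works without boto3

    s3 = boto3.client(
        "s3",
        endpoint_url="https://s3.rendoumi.com",  # placeholder, as in the script above
        aws_access_key_id="aaaaaaaa",
        aws_secret_access_key="bbbbbbb",
    )
    with open(xml_path, encoding="utf-8") as f:
        config = cors_xml_to_dict(f.read())
    s3.put_bucket_cors(Bucket=bucket, CORSConfiguration=config)
    return s3.get_bucket_cors(Bucket=bucket)["CORSRules"]
```

Usage would be `push_cors("/data/weedfs/cors.xml")`, which should return the same rules that `mc cors get` shows.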

And that's it.
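To double-check from the client side, you can imitate what a browser does before a cross-origin upload: send a preflight `OPTIONS` request carrying `Origin` and `Access-Control-Request-Method`, then look for `Access-Control-Allow-Origin` in the reply. Below is a minimal sketch; `preflight_allowed_origin` is a hypothetical helper, and the object URL you pass (e.g. something under `https://s3.rendoumi.com/mybucket/`) is a placeholder. How SeaweedFS answers a rejected preflight (status code, headers) is not assumed here; the function simply returns `None` when the header is missing.

```python
import urllib.error
import urllib.request
from typing import Optional


def preflight_allowed_origin(url: str, origin: str, method: str = "PUT") -> Optional[str]:
    """Send a CORS preflight and return Access-Control-Allow-Origin, or None if absent."""
    req = urllib.request.Request(
        url,
        method="OPTIONS",
        headers={"Origin": origin, "Access-Control-Request-Method": method},
    )
    try:
        with urllib.request.urlopen(req, timeout=10) as resp:
            return resp.headers.get("Access-Control-Allow-Origin")
    except urllib.error.HTTPError as err:
        # Non-2xx replies raise HTTPError; their headers are still readable
        return err.headers.get("Access-Control-Allow-Origin")
```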