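极客天成 has an impressive network file system, scaleflash, which takes full advantage of the lossless-network properties of RDMA and builds further on them. The result is a storage system that can stand in for an EMC disk array and host database systems such as Oracle, a real point of pride for domestic products.

I rewrote a CSI storage plugin for them, and this post records the process. It is based on Yandex's S3 CSI driver, so it can work at the low level and provide block devices, or move up to an NFS-like or S3-like level and provide a file system, which covers most needs. The source code will not be published; only the procedure is described here:

How to use the CSI plugin:

1. Import the image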

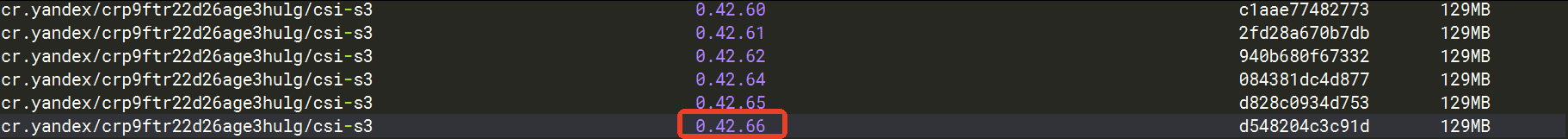

The image for the CSI storage plugin:

cr.yandex/crp9ftr22d26age3hulg/csi-s3:0.42.66

File: csi.tar

Copy the file to every worker node and import it into the local image store:

ctr --address /run/k3s/containerd/containerd.sock -n k8s.io images import /root/csi.tar

crictl -r unix:///run/k3s/containerd/containerd.sock images

If cr.yandex/crp9ftr22d26age3hulg/csi-s3:0.42.66 appears in the list, the import succeeded.
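The visual check of the image list can also be scripted. The following is a minimal sketch rather than part of the original procedure: `image_present` is a hypothetical helper name, and it assumes the listing has the repository in the first column and the tag in the second, which is how `crictl images` normally prints its table.

```shell
# Hypothetical helper: succeed if the listing on stdin contains REPO ($1) with TAG ($2).
# Assumes column 1 is the repository and column 2 is the tag.
image_present() {
  awk -v repo="$1" -v tag="$2" \
    '$1 == repo && $2 == tag { found = 1 } END { exit !found }'
}

# Usage on a worker node (not executed here):
#   crictl -r unix:///run/k3s/containerd/containerd.sock images \
#     | image_present cr.yandex/crp9ftr22d26age3hulg/csi-s3 0.42.66 \
#     && echo "import OK"
```

Running this on every worker node gives a quick yes/no answer before the driver is installed.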

2. Install the controller and nodeserver

Install the driver

File: driver.yaml

kubectl apply -f driver.yaml

Install the controller

File: controller.yaml

kubectl apply -f controller.yaml

Install the nodeserver

File: nodeserver.yaml

kubectl apply -f nodeserver.yaml

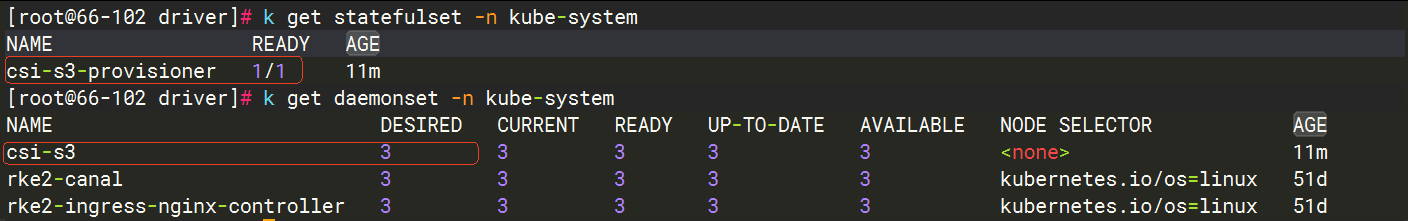

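To explain: the controller is a StatefulSet, and one instance for the whole cluster is enough; the nodeserver is a DaemonSet, so every worker node runs one replica.

There are 3 worker nodes above, so there are 3 replicas.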
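Because the nodeserver is a DaemonSet, the number of distinct nodes running its Pods should equal the number of worker nodes. A small sketch of that check follows. `count_nodes` is a hypothetical helper, and the namespace and label selector in the usage comment are assumptions, since the contents of nodeserver.yaml are not shown here.

```shell
# Hypothetical helper: count the distinct, non-empty node names given on stdin,
# one name per line.
count_nodes() {
  sort -u | grep -c .
}

# Usage (not executed here; namespace and label are assumptions):
#   kubectl -n kube-system get pods -l app=csi-s3 \
#     -o custom-columns=NODE:.spec.nodeName --no-headers | count_nodes
# With 3 worker nodes the result is expected to be 3.
```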

3. Using scaleflash block devices

The smallest allocation unit for a block device is 1G, so a request for 100M still yields a 1G volume.
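The rounding rule can be illustrated with a little arithmetic. This is only a sketch of the idea, not the plugin's actual code: `round_up_to_gib` is a hypothetical name, it handles just the M, Mi, G and Gi suffixes, and it assumes that "G" here means 2^30 bytes.

```shell
# Hypothetical helper: round a Kubernetes-style quantity up to whole GiB.
# Assumption: the block device's "G" unit is 2^30 bytes.
round_up_to_gib() {
  qty=$1
  case "$qty" in
    *Gi) bytes=$(( ${qty%Gi} * 1024 * 1024 * 1024 )) ;;
    *Mi) bytes=$(( ${qty%Mi} * 1024 * 1024 )) ;;
    *G)  bytes=$(( ${qty%G} * 1000 * 1000 * 1000 )) ;;
    *M)  bytes=$(( ${qty%M} * 1000 * 1000 )) ;;
    *)   echo "unsupported quantity: $qty" >&2; return 1 ;;
  esac
  gib=$(( 1024 * 1024 * 1024 ))
  # Ceiling division: add (gib - 1) before dividing.
  echo $(( (bytes + gib - 1) / gib ))
}

round_up_to_gib 100M   # prints 1
round_up_to_gib 1500M  # prints 2
```

So a PVC that requests `storage: 100M` ends up with the same volume size as one that requests `1Gi`.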

First define a StorageClass; from then on, keep using this one StorageClass:

cat << EOF > scaleflash-storageclass.yaml
---
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: scaleflash
provisioner: ru.yandex.s3.csi
parameters:
  mounter: scaleflash
  clstID: "nvmatrix_101"
  fsType: "xfs"
EOF

kubectl apply -f scaleflash-storageclass.yaml

Next, define the PVC:

cat << EOF > csi-pvc.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: csi-pvc
  namespace: default
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 100M
  storageClassName: scaleflash
EOF

kubectl apply -f csi-pvc.yaml

Define a Pod that uses this PVC:

cat << EOF > nginx-csi-pvc.yaml
---
apiVersion: v1
kind: Pod
metadata:
  name: nginx-pod-s3
spec:
  containers:
    - name: nginx-pod-s3
      image: docker.io/library/nginx:latest
      imagePullPolicy: IfNotPresent
      volumeMounts:
        - mountPath: "/usr/share/nginx/html"
          name: dynamic-storage
  volumes:
    - name: dynamic-storage
      persistentVolumeClaim:
        claimName: csi-pvc
EOF

kubectl apply -f nginx-csi-pvc.yaml

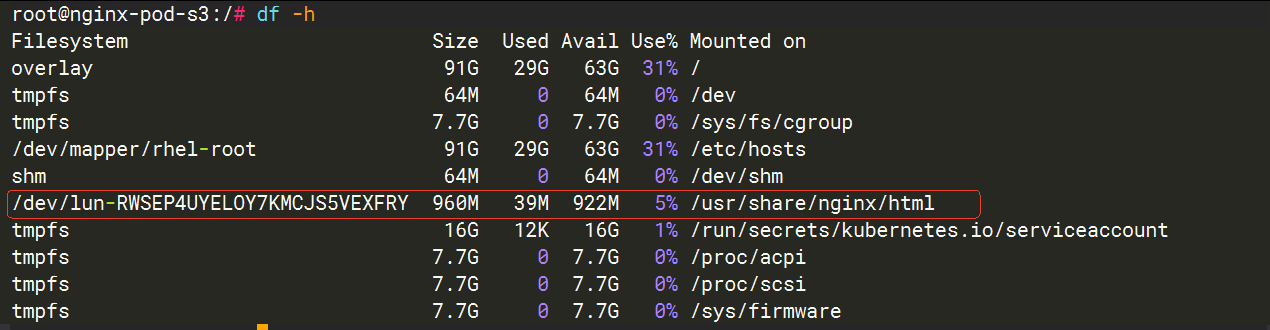

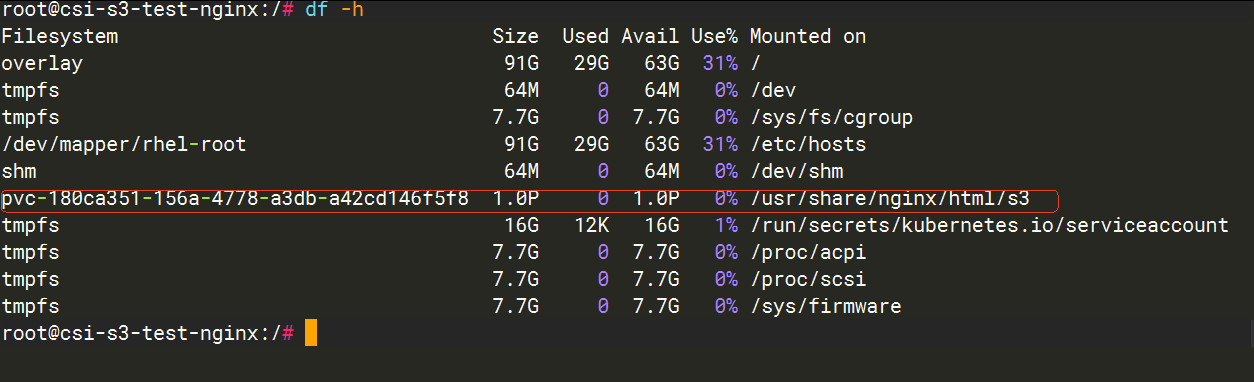

Enter the Pod; once you see that the LUN has been mounted, everything is OK.
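The mount can also be checked without an interactive shell. A minimal sketch, assuming the container exposes `/proc/mounts` in the usual Linux format (device, mount point, file system type, options); `mount_info` is a hypothetical helper name.

```shell
# Hypothetical helper: print "device fstype" for the mount point given as $1,
# reading /proc/mounts-style lines on stdin. Fails if the mount point is absent.
mount_info() {
  awk -v mp="$1" '$2 == mp { print $1, $3; found = 1 } END { exit !found }'
}

# Usage (not executed here):
#   kubectl exec nginx-pod-s3 -- cat /proc/mounts \
#     | mount_info /usr/share/nginx/html
```

With the StorageClass above, the reported file system type would be expected to be xfs, since that is what `fsType` requests.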

Delete the Pod and PVC resources in reverse order:

kubectl delete -f nginx-csi-pvc.yaml

kubectl delete -f csi-pvc.yaml

Verify with a StatefulSet. The heredoc delimiter is quoted so that the local shell does not expand $(date) while writing the file:

cat << 'EOF' > stateful.yaml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: statefulset-smb
  namespace: default
  labels:
    app: nginx
spec:
  serviceName: statefulset-smb
  replicas: 3
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: statefulset-smb
          image: docker.io/library/nginx:latest
          imagePullPolicy: IfNotPresent
          command:
            - "/bin/bash"
            - "-c"
            - set -euo pipefail; while true; do echo $(date) >> /mnt/data/outfile; sleep 1; done
          volumeMounts:
            - name: persistent-storage
              mountPath: /mnt/data
              readOnly: false
  updateStrategy:
    type: RollingUpdate
  selector:
    matchLabels:
      app: nginx
  volumeClaimTemplates:
    - metadata:
        name: persistent-storage
        namespace: default
      spec:
        storageClassName: scaleflash
        accessModes:
          - ReadWriteOnce
        resources:
          requests:
            storage: 1Gi
EOF

kubectl apply -f stateful.yaml

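You can scale the replica count down and back up, then check whether /mnt/data/outfile still contains the timestamps from the first start-up to confirm that the volume was retained.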
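One way to carry out that check is to compare the first line of the file before and after a scale-down/scale-up cycle. This is a sketch only: `volume_retained` is a hypothetical helper, and the Pod name `statefulset-smb-2` follows from the standard StatefulSet naming of `<name>-<ordinal>`.

```shell
# Hypothetical helper: the volume counts as retained when the first line of
# outfile is non-empty and identical before ($1) and after ($2) the cycle.
volume_retained() {
  [ -n "$1" ] && [ "$1" = "$2" ]
}

# Usage (not executed here):
#   before=$(kubectl exec statefulset-smb-2 -- head -n 1 /mnt/data/outfile)
#   kubectl scale statefulset statefulset-smb --replicas=1
#   kubectl scale statefulset statefulset-smb --replicas=3
#   # wait until statefulset-smb-2 is Running again, then:
#   after=$(kubectl exec statefulset-smb-2 -- head -n 1 /mnt/data/outfile)
#   volume_retained "$before" "$after" && echo "volume retained"
```

If the volume had been recreated, the file would start with a timestamp from after the scale-up and the comparison would fail.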

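4. Using nvfile (similar to NFS)

Note: rootPath must be located under /mnt, because only /mnt is mounted into the controller (mounting / itself into it does not work), so paths anywhere else cannot be recognized.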
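A quick sanity check on a candidate rootPath before writing the StorageClass might look like the sketch below. `valid_root_path` is a hypothetical helper, and it performs only a plain string check, so it does not resolve symlinks or `..` components.

```shell
# Hypothetical helper: accept only paths that lie below /mnt.
valid_root_path() {
  case "$1" in
    /mnt/?*) return 0 ;;
    *)       return 1 ;;
  esac
}

valid_root_path /mnt/nvfile && echo "ok: /mnt/nvfile"
valid_root_path /data/nvfile || echo "rejected: /data/nvfile"
```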

As with NFS, the volume capacity is meaningless here; whatever you specify has no effect.

Define the StorageClass:

cat << EOF > storageclass01.yaml
---
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: nvfile
provisioner: ru.yandex.s3.csi
parameters:
  mounter: nvfile
  rootPath: "/mnt/nvfile"
  modePerm: "0777"
EOF

kubectl apply -f storageclass01.yaml

Note that no subPath is defined above, so all new directories are created directly under /mnt/nvfile.

To place them under a subdirectory (for example prod), define another StorageClass and reference that one instead:

---
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: nvfile-prod
provisioner: ru.yandex.s3.csi
parameters:
  mounter: nvfile
  rootPath: "/mnt/nvfile"
  subPath: "/prod"
  modePerm: "0777"
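The relationship between rootPath and subPath can be pictured as a simple path join. The plugin's real logic is not shown in this post, so the following is only an illustrative sketch; `nvfile_base_dir` is a hypothetical name.

```shell
# Hypothetical helper: directory under which new volume directories would be
# created, given rootPath ($1) and an optional subPath ($2).
nvfile_base_dir() {
  root=${1%/}   # drop one trailing slash from rootPath
  sub=${2#/}    # drop one leading slash from subPath
  if [ -n "$sub" ]; then
    echo "$root/$sub"
  else
    echo "$root"
  fi
}

nvfile_base_dir /mnt/nvfile ""       # prints /mnt/nvfile
nvfile_base_dir /mnt/nvfile /prod    # prints /mnt/nvfile/prod
```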

Define the PVC:

cat << EOF > nvfile-pvc.yaml
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nvfile-pvc
  namespace: default
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 100M
  storageClassName: nvfile
EOF

kubectl apply -f nvfile-pvc.yaml

Define a Pod that uses the PVC above:

cat << EOF > nginx-nvfile.yaml
---
apiVersion: v1
kind: Pod
metadata:
  name: nginx-nvfile
spec:
  containers:
    - name: nginx-nvfile
      image: docker.io/library/nginx:latest
      imagePullPolicy: IfNotPresent
      volumeMounts:
        - mountPath: "/usr/share/nginx/html"
          name: dynamic-storage
  volumes:
    - name: dynamic-storage
      persistentVolumeClaim:
        claimName: nvfile-pvc
EOF

kubectl apply -f nginx-nvfile.yaml

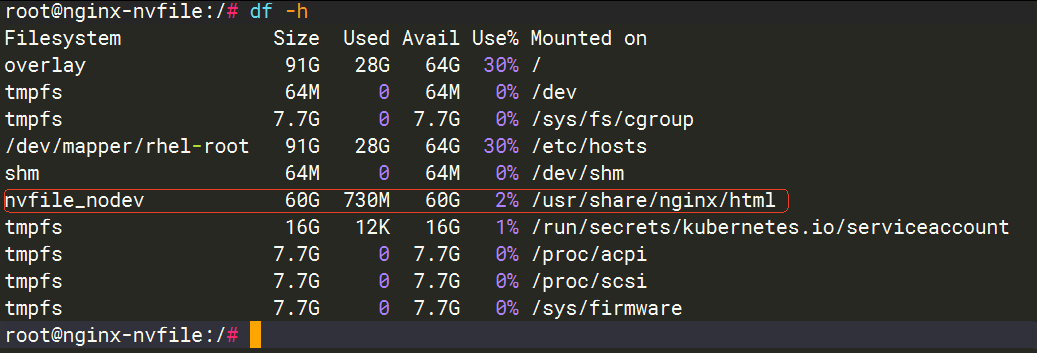

Enter the container; if an nvfile_nodev mount point shows up, it worked.

Verification with a StatefulSet (again with a quoted heredoc delimiter, so that $(date) reaches the file unexpanded):

cat << 'EOF' > stateful-nvfile.yaml
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: statefulset-nvfile-ng
  namespace: default
  labels:
    app: nvfile-nginx
spec:
  serviceName: statefulset-nvfile-ng
  replicas: 3
  template:
    metadata:
      labels:
        app: nvfile-nginx
    spec:
      containers:
        - name: statefulset-nvfile-ng
          image: docker.io/library/nginx:latest
          imagePullPolicy: IfNotPresent
          command:
            - "/bin/bash"
            - "-c"
            - set -euo pipefail; while true; do echo $(date) >> /mnt/smb/outfile; sleep 1; done
          volumeMounts:
            - name: pvc
              mountPath: /mnt/smb
              readOnly: false
  updateStrategy:
    type: RollingUpdate
  selector:
    matchLabels:
      app: nvfile-nginx
  volumeClaimTemplates:
    - metadata:
        name: pvc
      spec:
        storageClassName: nvfile
        accessModes: ["ReadWriteOnce"]
        resources:
          requests:
            storage: 1Gi
EOF

kubectl apply -f stateful-nvfile.yaml

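Scale it down and up in the same way, then look at /mnt/smb/outfile on the mount point: if it still contains the timestamps from the first start-up, the original volume was kept.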

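5. Using S3

S3 comes with no such constraints: anything that can speak the S3 protocol works, virtual machines included.

First set up a MinIO S3 service to simulate it. Port 9000 was already taken, so port 8000 is used:

http://192.168.66.101:8000

username: abcdefg

password: abcdefg

After logging in, generate a key pair and grant it all S3 permissions, making sure it can create new buckets: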

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "s3:*"
      ],
      "Resource": [
        "arn:aws:s3:::*"
      ]
    }
  ]
}

key:

accessKeyID: lj7mL2gAFgRCykTaaabbb

secretAccessKey: 0YklYzUmcxYcPjZKtmvHRN3cMQrUaCraaaabbbb

Then create the Secret:

cat << EOF > secret-s3.yaml
---
apiVersion: v1
kind: Secret
metadata:
  name: csi-s3-secret
  # Namespace depends on the configuration in the storageclass.yaml
  namespace: kube-system
stringData:
  accessKeyID: lj7mL2gAFgRCyaaaabbbb
  secretAccessKey: 0YklYzUmcxYcPjZKtmvHRN3cMQrUaCraaaabbbb
  # For AWS set it to "https://s3.<region>.amazonaws.com", for example https://s3.eu-central-1.amazonaws.com
  endpoint: http://192.168.66.101:8000
  # For AWS set it to AWS region
  #region: ""
EOF

kubectl apply -f secret-s3.yaml

Next, create the StorageClass:

cat << EOF > s3-storageclass.yaml
---
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: csi-s3
provisioner: ru.yandex.s3.csi
parameters:
  mounter: geesefs
  # you can set mount options here, for example limit memory cache size (recommended)
  options: "--memory-limit 1000 --dir-mode 0777 --file-mode 0666"
  # to use an existing bucket, specify it here:
  #bucket: some-existing-bucket
  csi.storage.k8s.io/provisioner-secret-name: csi-s3-secret
  csi.storage.k8s.io/provisioner-secret-namespace: kube-system
  csi.storage.k8s.io/controller-publish-secret-name: csi-s3-secret
  csi.storage.k8s.io/controller-publish-secret-namespace: kube-system
  csi.storage.k8s.io/node-stage-secret-name: csi-s3-secret
  csi.storage.k8s.io/node-stage-secret-namespace: kube-system
  csi.storage.k8s.io/node-publish-secret-name: csi-s3-secret
  csi.storage.k8s.io/node-publish-secret-namespace: kube-system
EOF

kubectl apply -f s3-storageclass.yaml

Create the PVC:

cat << EOF > pvc-s3.yaml
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: csi-s3-pvc
  namespace: default
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 5Gi
  storageClassName: csi-s3
EOF

kubectl apply -f pvc-s3.yaml

Create the Pod:

cat << EOF > nginx-s3.yaml
---
apiVersion: v1
kind: Pod
metadata:
  name: csi-s3-test-nginx
  namespace: default
spec:
  containers:
    - name: csi-s3-test-nginx
      image: docker.io/library/nginx:latest
      imagePullPolicy: IfNotPresent
      volumeMounts:
        - mountPath: /usr/share/nginx/html/s3
          name: webroot
  volumes:
    - name: webroot
      persistentVolumeClaim:
        claimName: csi-s3-pvc
        readOnly: false
EOF

kubectl apply -f nginx-s3.yaml

Enter the container and confirm that a volume backed by the PVC is mounted.

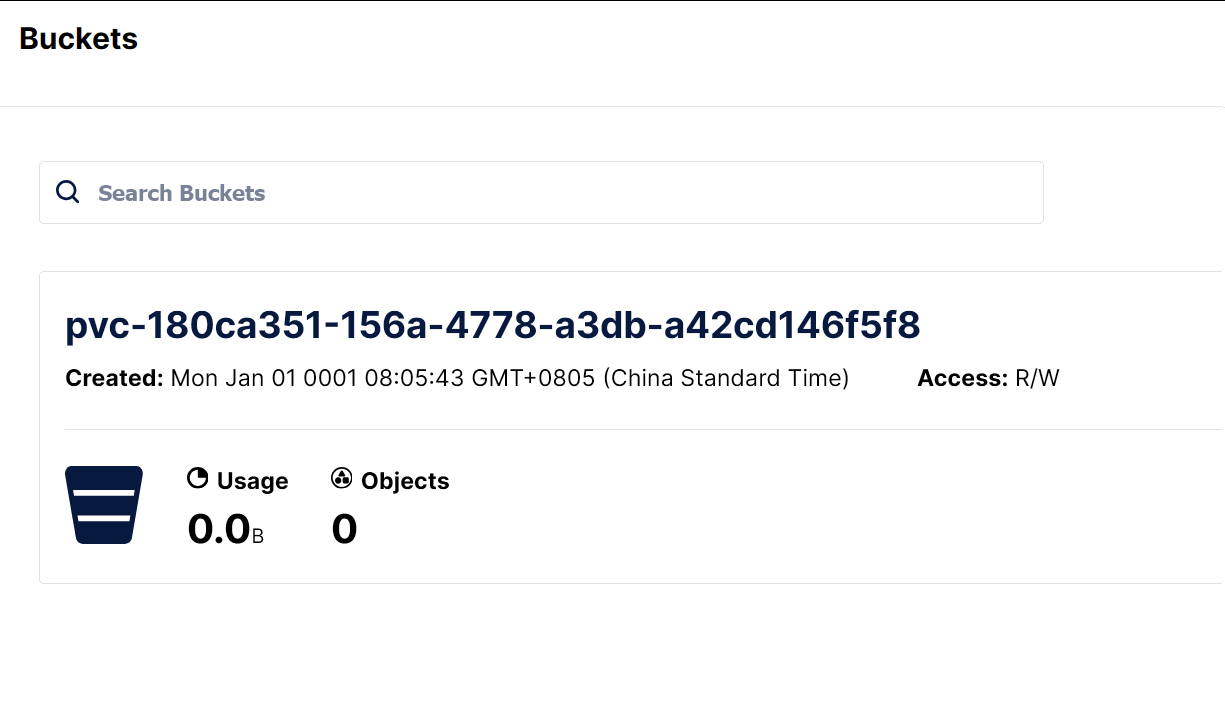

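In the MinIO console you can see the bucket that corresponds to this new volume.

A StatefulSet works the same way.

For more detail on the S3 side, see: https://github.com/yandex-cloud/k8s-csi-s3/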